For many organizations, the difference between success and failure often comes down to the quality of the underlying technology platform. Organizations need systems that scale with demand, adapt quickly to changing requirements, and remain secure and reliable. At Bridges, we've spent years refining how we build the cloud-native platforms that digital transformation depends on.

Our platform development philosophy is built on three core principles: security by design, scalability from day one, and operational excellence throughout the entire lifecycle. These principles guide every decision we make, from initial architecture design through deployment and ongoing operations. The result is platforms that not only meet today's requirements but are positioned to evolve and grow with our clients' businesses.

The journey from code to cloud involves numerous technical and strategic decisions that can have profound impacts on long-term success. Technology choices made early in the development process can either enable or constrain future growth, integration capabilities, and operational efficiency. Our experience building platforms across diverse industries has taught us which approaches deliver sustainable value and which create technical debt that becomes increasingly expensive over time.

This behind-the-scenes look at our platform development approach provides insights into how we balance competing requirements, make technology choices, and implement the processes that ensure consistent delivery of high-quality solutions. Whether you're evaluating technology partners or planning your own platform development initiatives, understanding these principles and practices can help you make more informed decisions and achieve better outcomes.

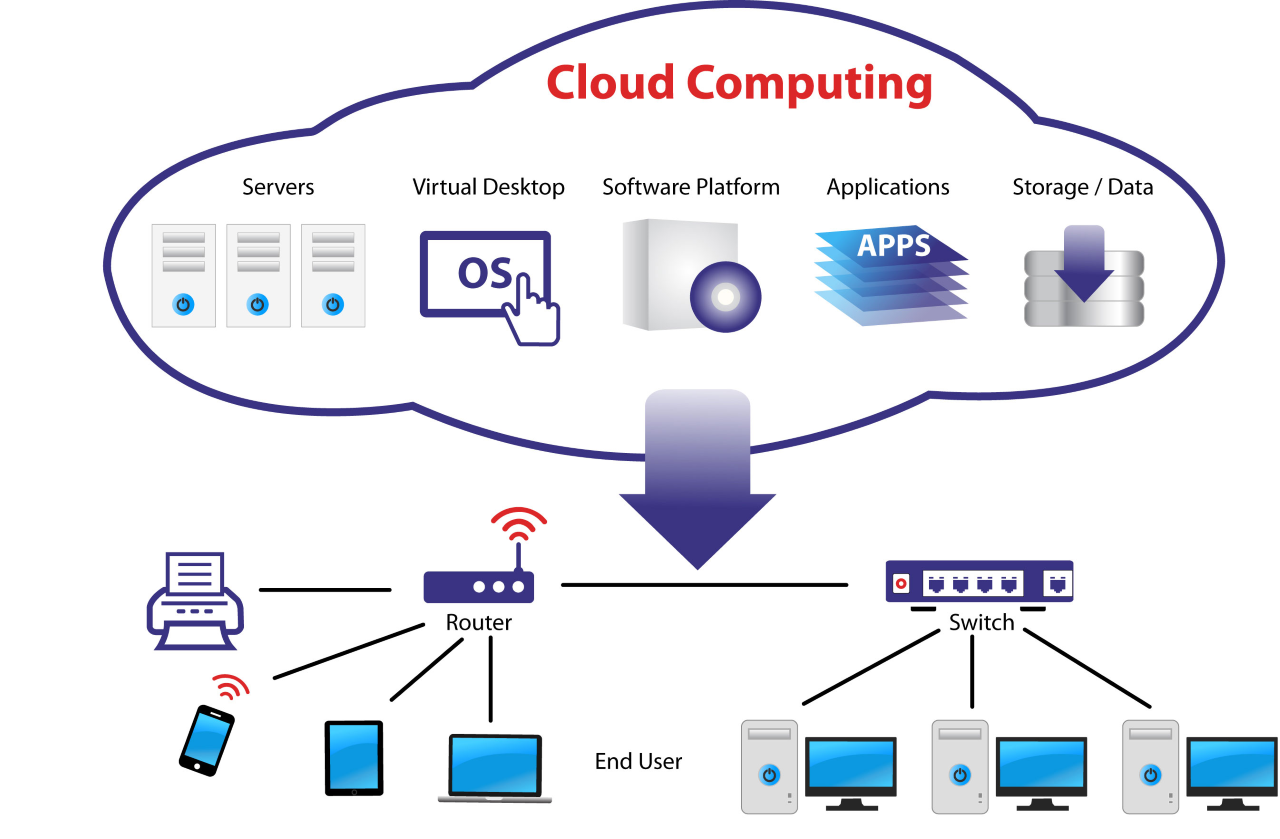

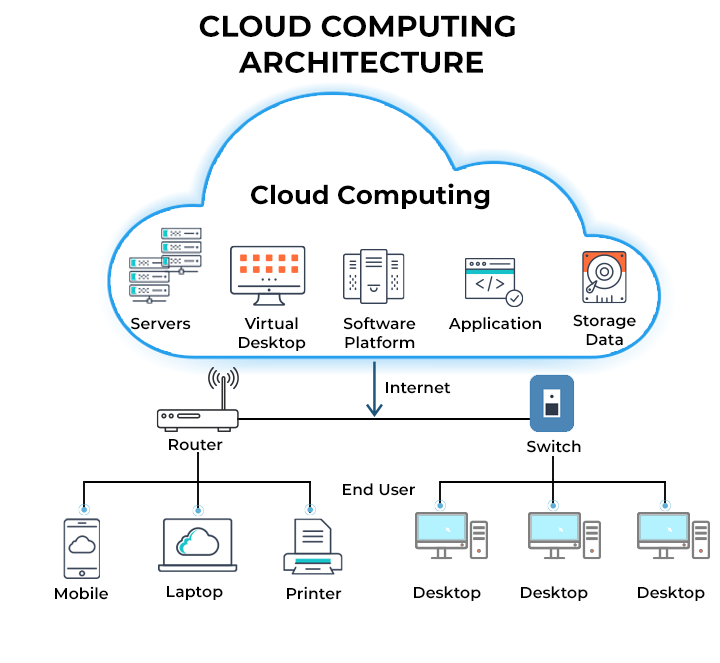

Cloud-native architecture represents more than just deploying applications to cloud infrastructure—it's a fundamental approach to designing systems that take full advantage of cloud capabilities while addressing the unique challenges of distributed computing environments.

Our platform architecture is built on microservices principles that enable independent development, deployment, and scaling of individual components. This approach provides several critical advantages over traditional monolithic architectures.

First, microservices enable teams to work independently on different components without creating dependencies that slow development and increase coordination overhead. Each service can be developed using the most appropriate technologies and can evolve at its own pace based on specific requirements and constraints.

Second, microservices provide operational flexibility that enables fine-grained scaling and resource optimization. Rather than scaling entire applications, we can scale individual services based on demand patterns, ensuring efficient resource utilization and cost optimization.

Third, microservices improve system resilience by isolating failures and enabling graceful degradation. When one service experiences problems, other services can continue operating, and the system can maintain partial functionality rather than experiencing complete outages.
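The text does not name a specific mechanism for graceful degradation. One widely used technique is a circuit breaker, sketched below as a hypothetical `CircuitBreaker` class with illustrative thresholds. After repeated failures it stops calling the struggling dependency for a cool-down period and serves a fallback (such as cached data), then lets a single trial call through to test whether the dependency has recovered.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitBreaker:
    """Stops calling a failing dependency for a cool-down period and serves a fallback instead."""

    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold  # illustrative default
        self.reset_timeout = reset_timeout          # seconds to stay open
        self.clock = clock                          # injectable for testing
        self.failures = 0
        self.opened_at: Optional[float] = None      # None means the circuit is closed

    def call(self, operation: Callable[[], T], fallback: Callable[[], T]) -> T:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                return fallback()  # open: fail fast and degrade gracefully
            # Half-open: allow one trial call; a single failure re-opens the circuit.
            self.opened_at = None
            self.failures = self.failure_threshold - 1
        try:
            result = operation()
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            return fallback()
        self.failures = 0  # a success closes the circuit again
        return result
```

While the circuit is open, callers get a degraded but fast answer instead of waiting on timeouts, which also keeps one failing service from tying up threads in its callers.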

However, microservices also introduce complexity in areas such as service discovery, inter-service communication, and distributed transaction management. Our architecture addresses these challenges through careful service design, comprehensive monitoring, and robust operational procedures.

Containerization provides the foundation for our microservices architecture, enabling consistent deployment environments and efficient resource utilization. We use Docker containers to package applications and their dependencies, ensuring that services run consistently across development, testing, and production environments.

Kubernetes serves as our container orchestration platform, automating the deployment, scaling, and management of containerized applications. Kubernetes supports rolling updates natively, and with additional tooling such as a service mesh or a progressive-delivery controller we also run blue-green deployments and canary releases, all of which minimize downtime and risk.

Our Kubernetes implementations include comprehensive monitoring, logging, and alerting systems that provide visibility into system performance and enable proactive problem resolution. We also implement robust security policies that control access to resources and ensure that containers run with minimal privileges.

Service mesh technology, typically implemented using Istio or similar platforms, provides additional capabilities for service-to-service communication, including traffic management, security policies, and observability features that are essential for managing complex microservices environments.

Every platform we build is designed with APIs as first-class citizens, enabling seamless integration with other systems and supporting the development of new applications and services. Our API-first approach ensures that all functionality is accessible programmatically, supporting automation, integration, and ecosystem development.

We implement comprehensive API management capabilities that include authentication, authorization, rate limiting, and monitoring. These capabilities ensure that APIs are secure, reliable, and performant while providing the visibility necessary for ongoing optimization and troubleshooting.
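Rate limiting is often implemented as a token bucket: each client may burst up to a fixed capacity, and tokens refill at a steady rate. The sketch below is not the behaviour of any particular API gateway; the class name and parameters are hypothetical, and the clock is injectable so the logic can be tested deterministically.

```python
import time
from typing import Callable


class TokenBucket:
    """Allows bursts up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, capacity: float, rate: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity  # start full so an idle client can burst
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False  # a gateway would typically answer HTTP 429 Too Many Requests
```

A production limiter would usually keep one bucket per client key in a shared store so that every gateway instance enforces the same limit.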

API versioning strategies enable us to evolve APIs over time while maintaining backward compatibility for existing integrations. We use semantic versioning and deprecation policies that provide clear guidance for API consumers while enabling continuous improvement and innovation.
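Under semantic versioning, a MAJOR bump signals a breaking change, while MINOR and PATCH releases stay backward compatible. A minimal compatibility check, assuming plain `MAJOR.MINOR.PATCH` strings and hypothetical function names, might look like this:

```python
from typing import Tuple

Version = Tuple[int, int, int]


def parse_version(text: str) -> Version:
    """Parse 'MAJOR.MINOR.PATCH'; pre-release and build suffixes are out of scope here."""
    major, minor, patch = (int(part) for part in text.split("."))
    return major, minor, patch


def is_compatible(server: str, client_requires: str) -> bool:
    """True if a server at `server` can serve a client built against `client_requires`.

    Same MAJOR means no breaking changes; the server must also be at least as new
    in MINOR/PATCH so that every feature the client relies on exists. SemVer makes
    no stability promise for 0.y.z, which this sketch does not special-case.
    """
    s, c = parse_version(server), parse_version(client_requires)
    return s[0] == c[0] and s[1:] >= c[1:]
```

A gateway could use a check like this to route existing 2.x clients to any deployed 2.x backend while a 3.0 release runs alongside it during the deprecation window.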

Documentation and developer experience are critical components of our API strategy. We provide comprehensive documentation, interactive testing tools, and code samples that enable developers to integrate quickly and effectively with our platforms.

Security is not an afterthought in our platform development process—it's a fundamental design principle that influences every architectural decision and implementation detail. Our security-by-design approach addresses threats at multiple levels and implements defense-in-depth strategies that provide robust protection against evolving security challenges.

Our security model is built on zero trust principles: no implicit trust is granted based on network location or asset ownership. Every request is authenticated against a verified identity, authorized, and encrypted, regardless of its source or destination.
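Per-request authentication can take several forms; mutual TLS and signed tokens are common. As one illustration, this sketch verifies an HMAC-SHA256 signature over the request and a timestamp using only Python's standard library. The signing scheme, the shared per-service key, and the five-minute replay window are assumptions made for the example.

```python
import hashlib
import hmac
import time
from typing import Optional

MAX_SKEW_SECONDS = 300  # assumed replay window: reject requests more than 5 minutes off


def sign(key: bytes, method: str, path: str, timestamp: int, body: bytes) -> str:
    """Hex HMAC-SHA256 over a canonical form of the request (hypothetical scheme)."""
    message = b"\n".join([method.upper().encode(), path.encode(), str(timestamp).encode(), body])
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify(key: bytes, method: str, path: str, timestamp: int, body: bytes,
           signature: str, now: Optional[int] = None) -> bool:
    """Authenticate one request; nothing is trusted because of where it came from."""
    current = int(time.time()) if now is None else now
    if abs(current - timestamp) > MAX_SKEW_SECONDS:
        return False  # stale or future-dated, even if the signature is valid
    expected = sign(key, method, path, timestamp, body)
    return hmac.compare_digest(expected, signature)  # constant-time comparison
```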

Identity and access management (IAM) systems provide centralized authentication and authorization capabilities that integrate with existing organizational systems while supporting modern authentication methods such as multi-factor authentication and single sign-on.

Network segmentation and micro-segmentation limit the scope of potential security breaches by controlling traffic flow between services and implementing least-privilege access policies. Software-defined networking enables dynamic policy enforcement that adapts to changing security requirements.
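At its core, micro-segmentation is a default-deny rule set with explicit exceptions. In Kubernetes this is typically expressed as NetworkPolicy resources; the Python sketch below models only the decision logic, with hypothetical service names and ports.

```python
from typing import FrozenSet, NamedTuple


class Flow(NamedTuple):
    source: str
    destination: str
    port: int


# Hypothetical policy: only these service-to-service flows are permitted.
ALLOWED_FLOWS: FrozenSet[Flow] = frozenset({
    Flow("web-frontend", "orders-api", 8080),
    Flow("orders-api", "orders-db", 5432),
})


def is_allowed(flow: Flow, policy: FrozenSet[Flow] = ALLOWED_FLOWS) -> bool:
    """Default deny: any flow not explicitly listed is blocked."""
    return flow in policy
```

Because the frontend has no direct rule to the database, a compromised frontend cannot reach it even though both run on the same network.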

Continuous monitoring and threat detection systems analyze network traffic, user behavior, and system activities to identify potential security threats in real time. Machine learning algorithms help identify anomalous behavior that might indicate security incidents or policy violations.

Data protection is a critical component of our security strategy, addressing both regulatory compliance requirements and business risk management objectives. Our approach includes encryption at rest and in transit, data classification and handling policies, and comprehensive audit trails.

Encryption strategies use industry-standard algorithms and key management practices that ensure data confidentiality while maintaining system performance. We implement encryption at multiple levels, including database encryption, file system encryption, and application-level encryption for sensitive data elements.

Data governance frameworks ensure that data is classified appropriately and handled according to its sensitivity level and regulatory requirements. These frameworks include policies for data retention, deletion, and cross-border transfer that address requirements such as GDPR, HIPAA, and other relevant regulations.

Privacy-by-design principles ensure that privacy considerations are addressed throughout the development lifecycle rather than being added as an afterthought. This includes data minimization, purpose limitation, and user consent management capabilities that support privacy compliance and user trust.

Our platforms are designed to support compliance with relevant industry regulations and standards, including SOC 2, ISO 27001, PCI DSS, and others as required by specific client needs and industry requirements.

Comprehensive logging and audit trails capture all system activities, user actions, and administrative changes, providing the visibility necessary for compliance reporting and security incident investigation. Log aggregation and analysis systems enable efficient searching and reporting across distributed systems.

Automated compliance monitoring systems continuously assess system configurations and activities against defined policies and standards, providing real-time alerts when potential compliance issues are detected. These systems help ensure ongoing compliance while reducing the manual effort required for compliance management.

Regular security assessments, including penetration testing and vulnerability scanning, validate the effectiveness of security controls and identify areas for improvement. We work with third-party security firms to conduct independent assessments that provide objective validation of our security posture.

Building platforms that can scale effectively requires careful attention to architecture, technology choices, and operational practices. Our approach to scalability addresses both technical and operational aspects of scale, ensuring that platforms can grow efficiently while maintaining performance and reliability.

Our architecture is designed for horizontal scaling, enabling us to add capacity by deploying additional instances of services rather than upgrading individual servers. This approach provides better cost efficiency and resilience compared to vertical scaling strategies.

Auto-scaling capabilities automatically adjust capacity based on demand patterns, ensuring that platforms can handle traffic spikes while minimizing costs during low-demand periods. Machine learning algorithms analyze historical usage patterns to predict demand and pre-scale resources when appropriate.
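The reactive half of auto-scaling can be illustrated with the rule documented for the Kubernetes HorizontalPodAutoscaler: desired replicas = ceil(current replicas × current metric / target metric), with no change when the ratio is within a tolerance (0.1 by default). The function below is a simplified sketch of that rule; the replica bounds are illustrative, and the predictive, ML-driven pre-scaling described in the text is not modelled.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 20,
                     tolerance: float = 0.1) -> int:
    """Reactive scaling rule modelled on the Kubernetes HPA formula."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: avoid flapping
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))  # clamp to configured bounds
```

For example, 4 replicas averaging 90% CPU against a 60% target gives ceil(4 × 1.5) = 6 replicas.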

Load balancing and traffic distribution systems ensure that requests are distributed efficiently across available resources while providing health checking and failover capabilities that maintain service availability even when individual instances experience problems.
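A minimal sketch of load balancing with health checking, assuming simple round-robin selection (the text does not specify an algorithm): the balancer cycles through backends and skips any instance currently marked unhealthy. Backend names are hypothetical.

```python
import itertools
from typing import Dict, List


class RoundRobinBalancer:
    """Cycles through backends, skipping any currently marked unhealthy."""

    def __init__(self, backends: List[str]) -> None:
        self.backends = list(backends)
        self.healthy: Dict[str, bool] = {b: True for b in self.backends}
        self._cycle = itertools.cycle(self.backends)

    def mark(self, backend: str, healthy: bool) -> None:
        # In practice this would be driven by periodic health-check probes.
        self.healthy[backend] = healthy

    def next_backend(self) -> str:
        # Try each backend at most once per call before giving up.
        for _ in range(len(self.backends)):
            candidate = next(self._cycle)
            if self.healthy[candidate]:
                return candidate
        raise RuntimeError("no healthy backends available")
```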

Database scaling strategies include read replicas, sharding, and caching layers that enable databases to handle increasing loads while maintaining performance. We carefully design data models and access patterns to support efficient scaling and minimize cross-shard transactions.
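Sharding needs a rule that maps each key to a shard. The text doesn't say which is used; consistent hashing is one common choice because adding a shard relocates only the keys that land on the new shard. The sketch below uses virtual nodes to spread load evenly; the vnode count of 100 and the shard names are arbitrary assumptions.

```python
import bisect
import hashlib
from typing import List, Tuple


def _hash(key: str) -> int:
    # SHA-256 is used only to spread keys uniformly, not for security.
    return int.from_bytes(hashlib.sha256(key.encode()).digest()[:8], "big")


class ConsistentHashRing:
    """Maps keys to shards so that adding a shard moves only a fraction of keys."""

    def __init__(self, shards: List[str], vnodes: int = 100) -> None:
        self.vnodes = vnodes
        self._ring: List[Tuple[int, str]] = []  # sorted (position, shard) pairs
        for shard in shards:
            self.add(shard)

    def add(self, shard: str) -> None:
        for i in range(self.vnodes):
            bisect.insort(self._ring, (_hash(f"{shard}#{i}"), shard))

    def shard_for(self, key: str) -> str:
        if not self._ring:
            raise RuntimeError("ring is empty")
        # First virtual node clockwise from the key's position, wrapping around.
        idx = bisect.bisect(self._ring, (_hash(key), ""))
        return self._ring[idx % len(self._ring)][1]
```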

Comprehensive caching strategies reduce latency and improve performance while reducing load on backend systems. We implement caching at multiple levels, including application caches, database query caches, and content delivery networks (CDNs).

Redis and similar in-memory data stores provide high-performance caching capabilities for frequently accessed data and session information. These systems are configured with appropriate eviction policies and replication strategies that balance performance with data consistency requirements.
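Least-recently-used (LRU) eviction is one of the policies Redis offers when memory is full, although Redis approximates it by sampling keys rather than tracking exact order. An exact LRU cache is easy to sketch with `collections.OrderedDict`; the class name and size limit here are hypothetical.

```python
from collections import OrderedDict
from typing import Generic, Hashable, Optional, TypeVar

V = TypeVar("V")


class LRUCache(Generic[V]):
    """Bounded cache that evicts the least recently used entry when full."""

    def __init__(self, max_entries: int) -> None:
        self.max_entries = max_entries
        self._data: "OrderedDict[Hashable, V]" = OrderedDict()

    def get(self, key: Hashable) -> Optional[V]:
        if key not in self._data:
            return None
        self._data.move_to_end(key)  # mark as most recently used
        return self._data[key]

    def put(self, key: Hashable, value: V) -> None:
        self._data[key] = value
        self._data.move_to_end(key)
        if len(self._data) > self.max_entries:
            self._data.popitem(last=False)  # evict the least recently used entry
```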

CDN integration ensures that static content and frequently accessed dynamic content are delivered from edge locations close to users, reducing latency and improving user experience. CDN configurations include cache invalidation strategies that ensure content freshness while maximizing cache hit rates.

Application-level caching strategies are designed to minimize database queries and expensive computations while maintaining data consistency. We use cache-aside, write-through, and write-behind patterns as appropriate for different use cases and consistency requirements.
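Of the three patterns, cache-aside is the simplest to illustrate: the application checks the cache first, loads from the database on a miss, and writes the result back with an expiry. In this sketch an in-process dictionary stands in for a shared cache, and the `loader` callback and TTL are hypothetical.

```python
import time
from typing import Callable, Dict, Generic, Hashable, Tuple, TypeVar

V = TypeVar("V")


class CacheAside(Generic[V]):
    """Read from cache; on a miss, load from the source of truth and populate the cache."""

    def __init__(self, loader: Callable[[Hashable], V], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.loader = loader  # e.g. a database query (hypothetical)
        self.ttl = ttl_seconds
        self.clock = clock
        self._entries: Dict[Hashable, Tuple[float, V]] = {}  # key -> (expires_at, value)

    def get(self, key: Hashable) -> V:
        entry = self._entries.get(key)
        if entry is not None and entry[0] > self.clock():
            return entry[1]  # fresh hit
        value = self.loader(key)  # miss or expired: go to the database
        self._entries[key] = (self.clock() + self.ttl, value)
        return value

    def invalidate(self, key: Hashable) -> None:
        # Writers update the database first, then invalidate, so the next read reloads.
        self._entries.pop(key, None)
```

Write-through would instead update the cache and database together on every write, and write-behind would acknowledge the cache write and flush to the database asynchronously, trading durability for latency.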

Comprehensive performance monitoring provides visibility into system behavior and enables proactive optimization before performance problems impact users. Our monitoring strategy includes application performance monitoring (APM), infrastructure monitoring, and user experience monitoring.

Real-time metrics and alerting systems provide immediate notification of performance issues while historical data analysis enables trend identification and capacity planning. Machine learning algorithms help identify performance anomalies and predict potential issues before they become critical.

Performance testing and load testing are integrated into our development and deployment processes, ensuring that performance requirements are validated before changes are deployed to production. Automated testing includes both synthetic load testing and chaos engineering practices that validate system resilience.

Continuous optimization processes analyze performance data to identify improvement opportunities and implement optimizations that improve efficiency and reduce costs. This includes database query optimization, code profiling, and infrastructure tuning based on actual usage patterns.

Our platform development process is built on DevOps principles that emphasize collaboration, automation, and continuous improvement. This approach enables us to deliver high-quality software quickly and reliably while maintaining the flexibility to respond to changing requirements.

All infrastructure is defined and managed using infrastructure as code (IaC) principles that ensure consistency, repeatability, and version control for infrastructure changes. We use tools such as Terraform, CloudFormation, and Kubernetes manifests to define infrastructure declaratively.

IaC enables us to create identical environments for development, testing, and production, reducing the risk of environment-specific issues and enabling reliable testing and deployment processes. Version control for infrastructure definitions provides audit trails and enables rollback capabilities when needed.

Automated provisioning and configuration management reduce the time and effort required to create new environments while ensuring that security policies and operational standards are consistently applied. This automation also reduces the risk of human error and configuration drift.

Environment management strategies include ephemeral environments for testing and development that can be created and destroyed on demand, reducing costs while providing developers with isolated environments for experimentation and testing.

Our continuous integration and continuous delivery (CI/CD) pipelines automate the entire software delivery process from code commit through production deployment. These pipelines include automated testing, security scanning, and deployment processes that ensure consistent quality and security.

Automated testing strategies include unit tests, integration tests, end-to-end tests, and performance tests that validate functionality, security, and performance requirements. Test automation is integrated into the development process, providing immediate feedback to developers and preventing defects from reaching production.

Security scanning is integrated into CI/CD pipelines, including static code analysis, dependency vulnerability scanning, and container image scanning. These automated security checks ensure that security issues are identified and addressed early in the development process.

Deployment automation includes blue-green deployments, canary releases, and feature flags that enable safe, controlled rollouts of new functionality. These deployment strategies minimize risk while enabling rapid iteration and continuous improvement.
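Canary releases and feature flags both need a stable way to decide which users see the new version. A common approach, sketched here with a hypothetical flag name, is to hash each user ID into one of 100 buckets and enable the change for buckets below the rollout percentage.

```python
import hashlib


def rollout_bucket(flag: str, user_id: str) -> int:
    """Deterministically map a user to a bucket 0-99 for a given flag."""
    digest = hashlib.sha256(f"{flag}:{user_id}".encode()).digest()
    return int.from_bytes(digest[:4], "big") % 100


def is_enabled(flag: str, user_id: str, rollout_percent: int) -> bool:
    """A user gets the new version if their bucket falls below the rollout percentage."""
    return rollout_bucket(flag, user_id) < rollout_percent
```

Because the hash is deterministic, a user keeps the same experience across requests, and raising the percentage from 10 to 50 only adds users, so nobody flips back and forth during a rollout. Salting the hash with the flag name keeps different experiments from always selecting the same users.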

Comprehensive monitoring and observability capabilities provide visibility into system behavior and enable rapid problem resolution when issues occur. Our monitoring strategy includes metrics, logs, and distributed tracing that provide different perspectives on system behavior.

Metrics collection and analysis provide quantitative insights into system performance, resource utilization, and business outcomes. We use time-series databases and visualization tools to analyze trends and identify patterns that inform optimization and capacity planning decisions.

Centralized logging aggregates logs from all system components, enabling efficient searching and analysis across distributed systems. Log analysis tools help identify error patterns, security events, and performance issues that might not be apparent from metrics alone.

Distributed tracing provides visibility into request flows across microservices, enabling identification of performance bottlenecks and error sources in complex distributed systems. Tracing data helps optimize service interactions and troubleshoot issues that span multiple services.
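Tracing depends on propagating a trace context with every request. The sketch below assumes the W3C Trace Context `traceparent` header (`version-traceid-parentid-flags`). It parses an incoming header and derives the context for the next hop, keeping the trace ID and minting a new span ID. Real deployments would normally rely on a tracing library such as OpenTelemetry rather than hand-rolled parsing; the function names here are hypothetical.

```python
import re
import secrets
from typing import NamedTuple, Optional

# Only version 00 of the header is handled in this sketch.
_TRACEPARENT = re.compile(r"00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})")


class SpanContext(NamedTuple):
    trace_id: str
    span_id: str
    flags: str

    def to_header(self) -> str:
        return f"00-{self.trace_id}-{self.span_id}-{self.flags}"


def parse_traceparent(header: str) -> Optional[SpanContext]:
    match = _TRACEPARENT.fullmatch(header.strip())
    if not match:
        return None
    trace_id, span_id, flags = match.groups()
    if trace_id == "0" * 32 or span_id == "0" * 16:
        return None  # all-zero IDs are invalid
    return SpanContext(trace_id, span_id, flags)


def child_context(incoming: Optional[SpanContext]) -> SpanContext:
    """Keep the caller's trace ID (or start a new trace) and mint a fresh span ID."""
    if incoming is None:
        return SpanContext(secrets.token_hex(16), secrets.token_hex(8), "01")
    return SpanContext(incoming.trace_id, secrets.token_hex(8), incoming.flags)
```

Every service that forwards the child header adds one hop under the same trace ID, which is what lets a tracing backend reassemble the full request path.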

Our technology choices are driven by requirements for reliability, performance, security, and maintainability. We select proven technologies that have strong community support and clear upgrade paths while avoiding vendor lock-in that could limit future flexibility.

We use multiple programming languages and frameworks based on specific requirements and team expertise. Our primary languages are Python, JavaScript/TypeScript on Node.js, Go, and Java, each selected for specific use cases and performance requirements.

Python is our preferred language for data processing, machine learning, and rapid prototyping applications. Its extensive ecosystem of libraries and frameworks enables rapid development while maintaining code quality and maintainability.

Node.js is used for real-time applications and APIs that require high concurrency and low latency. Its event-driven architecture and extensive package ecosystem make it well-suited for building scalable web services and real-time communication systems.

Go is used for system-level services and applications that require high performance and low resource consumption. Its built-in concurrency support and efficient compilation make it ideal for building microservices and infrastructure components.

Java remains important for enterprise applications that require robust transaction processing and integration with existing enterprise systems. The Java ecosystem provides mature frameworks and tools that support complex business logic and enterprise integration requirements.

Our data management strategy includes both relational and NoSQL databases selected based on specific use cases and requirements. We use PostgreSQL for transactional workloads that require ACID compliance and complex queries, while MongoDB and Redis serve specialized use cases.

PostgreSQL provides robust relational database capabilities with excellent performance, reliability, and feature completeness. Its support for JSON data types and advanced indexing capabilities makes it suitable for both traditional relational and semi-structured data use cases.

MongoDB is used for document-oriented data and applications that require flexible schema evolution. Its horizontal scaling capabilities and rich query language make it suitable for content management and catalog applications.

Redis serves as both a caching layer and a data store for real-time applications that require low-latency data access. Its support for various data structures and pub/sub messaging makes it valuable for session management, real-time analytics, and message queuing.

Data pipeline and ETL capabilities are built using Apache Kafka, Apache Airflow, and custom processing services that enable real-time and batch data processing. These tools support data integration, transformation, and analysis workflows that power business intelligence and machine learning applications.

We design platforms to be cloud-agnostic while taking advantage of specific cloud provider capabilities when appropriate. Our primary cloud platforms include AWS, Azure, and Google Cloud Platform, each offering unique capabilities and services.

AWS provides the broadest range of services and the most mature ecosystem, making it our preferred platform for complex, multi-service applications. Services such as EKS, RDS, and Lambda enable us to build sophisticated applications while leveraging managed services that reduce operational overhead.

Azure integration is important for clients with existing Microsoft ecosystems, providing seamless integration with Active Directory and Microsoft Entra ID (formerly Azure Active Directory), Microsoft 365, and other Microsoft services. Azure's hybrid cloud capabilities also support clients with on-premises infrastructure requirements.

Google Cloud Platform offers advanced machine learning and data analytics capabilities that are valuable for AI-powered applications. Services such as BigQuery, Vertex AI (the successor to Cloud ML Engine), and Google Kubernetes Engine (GKE) provide powerful capabilities for data-intensive applications.

Multi-cloud strategies enable us to avoid vendor lock-in while taking advantage of best-of-breed services from different providers. Container orchestration and infrastructure as code enable portability across cloud platforms when required.

Quality assurance is integrated throughout our development process rather than being treated as a separate phase. Our testing strategies include multiple levels of validation that ensure functionality, performance, security, and reliability requirements are met.

Comprehensive test automation reduces the time and effort required for quality assurance while improving test coverage and consistency. Our testing pyramid includes unit tests, integration tests, and end-to-end tests that validate different aspects of system behavior.

Unit testing frameworks validate individual components and functions in isolation, ensuring that code changes don't introduce regressions and that new functionality works as expected. We maintain high test coverage standards and use test-driven development practices when appropriate.

Integration testing validates interactions between components and services, ensuring that APIs work correctly and that data flows properly through the system. These tests use realistic test data and simulate production-like conditions to identify integration issues early.

End-to-end testing validates complete user workflows and business processes, ensuring that the system works correctly from the user's perspective. These tests are automated with browser automation tools such as Selenium and Cypress, which simulate user interactions in web interfaces; native mobile interfaces are covered by mobile-specific test tooling.

Performance testing validates that systems meet performance requirements under various load conditions. Load testing, stress testing, and endurance testing help identify performance bottlenecks and validate that systems can handle expected traffic volumes.

Security testing is integrated into our development and deployment processes to ensure that security vulnerabilities are identified and addressed before they reach production. Our security testing strategy includes static analysis, dynamic analysis, and penetration testing.

Static code analysis tools scan source code for security vulnerabilities, coding errors, and compliance violations. These tools are integrated into CI/CD pipelines and provide immediate feedback to developers about potential security issues.

Dynamic application security testing (DAST) tools test running applications for security vulnerabilities such as injection attacks, cross-site scripting, and authentication bypasses. These tests simulate real-world attack scenarios and identify vulnerabilities that might not be apparent from static analysis.

Dependency scanning tools identify known vulnerabilities in third-party libraries and frameworks, enabling us to update or replace vulnerable components before they can be exploited. Automated dependency updates help maintain security while minimizing maintenance overhead.

Regular penetration testing by third-party security firms provides independent validation of security controls and identifies potential vulnerabilities that automated tools might miss. These assessments help ensure that our security measures are effective against real-world threats.

Operational excellence is achieved through systematic approaches to monitoring, incident management, and continuous improvement. Our site reliability engineering (SRE) practices ensure that platforms maintain high availability and performance while enabling rapid innovation and deployment.

Comprehensive monitoring provides visibility into system health, performance, and user experience. Our monitoring strategy includes infrastructure monitoring, application monitoring, and business metrics that provide different perspectives on system behavior.

Infrastructure monitoring tracks server health, resource utilization, and network performance across all system components. These metrics help identify capacity constraints and infrastructure issues before they impact users.

Application monitoring provides insights into application performance, error rates, and user experience metrics. Application performance monitoring (APM) tools track request flows, database queries, and external service calls to identify performance bottlenecks.

Business metrics monitoring tracks key performance indicators that reflect business outcomes and user satisfaction, helping confirm that technical improvements actually translate into business value.

Alerting systems provide immediate notification of issues while minimizing alert fatigue through intelligent filtering and escalation policies. Machine learning algorithms help identify anomalous behavior and reduce false positive alerts.
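One simple way to suppress transient alerts, modelled on the `for` duration in Prometheus alerting rules, is to fire only after a condition has held for several consecutive evaluations. This sketch is a plain threshold rule, not the machine-learning filtering the text describes; the threshold, sample values, and evaluation count are illustrative.

```python
from typing import List


def evaluate_alert(samples: List[float], threshold: float, required_consecutive: int) -> List[str]:
    """Return the alert state after each evaluation.

    The alert moves from 'pending' to 'firing' only once the condition has held
    for `required_consecutive` evaluations in a row, so a single spike pages no one.
    """
    states: List[str] = []
    streak = 0
    for value in samples:
        streak = streak + 1 if value > threshold else 0  # reset on any healthy sample
        if streak == 0:
            states.append("ok")
        elif streak < required_consecutive:
            states.append("pending")
        else:
            states.append("firing")
    return states
```

With an error-rate threshold of 5% and three required evaluations, the series 0.5, 6.0, 0.4, 6.0, 7.0, 8.0 only fires on the last sample; the isolated spike at the second sample never pages anyone.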

Structured incident response processes ensure that issues are resolved quickly and that lessons learned are incorporated into future improvements. Our incident management approach includes clear escalation procedures, communication protocols, and post-incident review processes.

On-call rotation and escalation procedures ensure that qualified personnel are available to respond to incidents 24/7. Runbooks and automation tools enable rapid problem resolution while reducing the risk of human error during high-stress situations.

Incident communication protocols keep stakeholders informed about issue status and resolution progress. Automated status pages and notification systems provide transparency while reducing the communication overhead during incident response.

Post-incident reviews analyze the root causes of incidents and identify improvements to prevent similar issues in the future. These reviews focus on process and system improvements rather than individual blame, creating a culture of continuous improvement.

Proactive capacity planning ensures that systems can handle growth while maintaining performance and cost efficiency. Our capacity planning process includes trend analysis, load forecasting, and performance modeling.

Historical data analysis identifies growth trends and seasonal patterns that inform capacity planning decisions. Machine learning algorithms help predict future resource requirements based on business growth and usage patterns.

Performance modeling and load testing validate capacity plans and identify potential bottlenecks before they impact production systems. These models help optimize resource allocation and identify the most cost-effective scaling strategies.

Capacity planning also feeds back into continuous optimization: trend data and load-test results show where databases, code paths, and infrastructure can be tuned to match actual usage patterns.

Our platform development approach has delivered measurable results across diverse industries and use cases. These success stories demonstrate the practical benefits of our methodology and provide insights into how our approach addresses real-world challenges.

A leading financial services company needed to modernize their legacy trading platform to support real-time analytics and regulatory reporting while maintaining the reliability and performance that their business demanded.

Our team designed and implemented a cloud-native platform that replaced their monolithic legacy system with a microservices architecture that could scale independently based on trading volume and analytical workloads. The new platform reduced processing latency by 75% while improving system reliability and reducing operational costs.

The implementation included comprehensive security controls that met regulatory requirements while enabling real-time risk management and compliance reporting. API-first design enabled integration with third-party data providers and analytical tools that enhanced trading capabilities.

A healthcare organization required a platform that could securely process and analyze patient data while maintaining HIPAA compliance and supporting research initiatives across multiple institutions.

We built a cloud-native data platform that included comprehensive data governance, privacy controls, and audit capabilities that exceeded HIPAA requirements while enabling advanced analytics and machine learning applications.

The platform's microservices architecture enabled independent scaling of data ingestion, processing, and analysis workloads while maintaining data security and access controls. API-based integration enabled seamless connectivity with existing electronic health record systems and research tools.

A global logistics company needed a platform that could optimize route planning and fleet management across multiple countries while integrating with local transportation management systems and regulatory requirements.

Our team developed a multi-tenant platform that could adapt to local requirements while providing global visibility and optimization capabilities. The platform's AI-powered optimization algorithms reduced transportation costs by 20% while improving delivery performance.

Cloud-native architecture enabled the platform to scale dynamically based on demand patterns while maintaining consistent performance across different geographic regions. Comprehensive monitoring and alerting ensured high availability and rapid problem resolution.

Platform development continues to evolve in response to new technologies, changing business requirements, and lessons learned from successful implementations. Several trends are shaping the future of platform development and influencing our approach to building next-generation systems.

Artificial intelligence and machine learning are becoming integral components of modern platforms, providing capabilities for intelligent automation, predictive analytics, and personalized user experiences.

Our platform architecture includes AI/ML capabilities as first-class citizens, with dedicated services for model training, deployment, and monitoring. MLOps practices ensure that machine learning models can be developed, deployed, and maintained with the same rigor as traditional software components.

Edge computing capabilities enable AI processing closer to data sources and users, reducing latency and improving privacy while enabling real-time decision-making. Our platforms are designed to support hybrid cloud-edge architectures that optimize performance and cost.

Serverless computing and event-driven architectures are enabling new approaches to platform design that improve cost efficiency and operational simplicity while maintaining scalability and reliability.

Function-as-a-Service (FaaS) platforms enable fine-grained scaling and cost optimization for specific workloads while reducing operational overhead. Our platforms incorporate serverless capabilities where appropriate while maintaining the flexibility to use traditional compute resources when needed.

Event-driven architectures enable loose coupling between services and support real-time processing and integration patterns that improve system responsiveness and flexibility. Event streaming platforms such as Apache Kafka provide the foundation for building reactive, scalable systems.
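
The loose coupling described above comes from producers publishing to a topic without knowing who consumes it. The sketch below is a minimal in-process publish/subscribe bus that shows this shape. It is not Kafka's API, and the topic name and handlers in the usage note are hypothetical. A real event streaming platform adds what this sketch omits: durable storage, partitioning, consumer groups, and replay.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List

# A handler receives one event payload and returns nothing.
Handler = Callable[[Dict[str, Any]], None]


class EventBus:
    """Minimal synchronous, in-memory publish/subscribe bus (illustration only)."""

    def __init__(self) -> None:
        # Topic name -> handlers registered for that topic, in subscription order.
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        # Consumers register interest in a topic; producers never reference them.
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: Dict[str, Any]) -> int:
        # Deliver the event to every handler on the topic; return how many ran.
        # .get() avoids creating an empty entry for topics nobody subscribes to.
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(event)
        return len(handlers)
```

For instance, an order service can call `bus.publish("order.created", {"order_id": "A-100"})`, and billing and notification handlers subscribed to that topic both react. Adding a third consumer requires no change to the publisher.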

Environmental sustainability is becoming an important consideration in platform design, driving adoption of energy-efficient technologies and optimization strategies that reduce carbon footprint while maintaining performance.

Our platform designs include energy efficiency considerations such as optimized resource utilization, efficient algorithms, and sustainable infrastructure choices. Cloud provider sustainability initiatives and renewable energy programs are factored into our platform deployment strategies.

Carbon footprint monitoring and optimization tools help organizations understand and reduce the environmental impact of their technology platforms while maintaining business objectives and performance requirements.
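
Most carbon footprint tools rest on the same basic arithmetic: energy consumed multiplied by the carbon intensity of the electricity grid that supplied it. The sketch below estimates operational emissions that way. It scales server power by a data-centre PUE (power usage effectiveness) factor. The workload figures, the default PUE of 1.2, and the grid intensities in the usage note are hypothetical values for illustration, not measurements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    name: str
    avg_power_watts: float  # average IT power draw attributed to the workload
    hours: float            # runtime during the reporting period


def operational_emissions_kg(
    workload: Workload,
    grid_intensity_g_per_kwh: float,  # grams CO2e per kWh for the hosting region
    pue: float = 1.2,                 # hypothetical data-centre overhead factor
) -> float:
    """Estimate operational emissions in kg CO2e.

    energy_kwh   = watts * hours / 1000 * PUE
    emissions_kg = energy_kwh * grid_intensity / 1000
    """
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    energy_kwh = workload.avg_power_watts * workload.hours / 1000 * pue
    return energy_kwh * grid_intensity_g_per_kwh / 1000
```

A 500 W workload running for 720 hours at PUE 1.2 uses 432 kWh. In a region at 400 gCO2e/kWh that is about 172.8 kg CO2e. The same workload in a region at 100 gCO2e/kWh comes to about 43.2 kg. This is why hosting-region choice and provider renewable energy programs feature in deployment decisions.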

At Bridges, we combine deep technical expertise with proven methodologies to deliver platforms that serve as the foundation for digital transformation success. Our approach addresses both immediate requirements and long-term strategic objectives while ensuring security, scalability, and operational excellence.

We begin every platform engagement with a thorough assessment of business requirements, technical constraints, and strategic objectives. This assessment informs our platform architecture decisions and ensures that technology choices are aligned with business goals.

Our platform strategy development process includes detailed analysis of scalability requirements, integration needs, security requirements, and operational constraints. We help organizations understand the trade-offs between different architectural approaches and select the strategy that best meets their specific needs.

Our technical team has extensive experience implementing complex platforms across diverse industries and technical environments. We pair that cloud expertise with structured project management so that delivery stays on schedule and on scope.

Our implementation approach emphasizes collaboration with client teams, knowledge transfer, and capability building that enables organizations to maintain and evolve their platforms over time. We provide comprehensive documentation, training, and ongoing support to ensure long-term success.

Platform development is an ongoing process that requires continuous optimization and evolution to meet changing requirements and take advantage of new technologies. We provide comprehensive support services that include performance monitoring, security updates, and feature enhancements.

Our optimization approach includes regular platform assessments, performance tuning, and technology updates that ensure platforms continue to deliver value while maintaining security and reliability. We also help organizations plan and implement platform evolution strategies that support business growth and changing requirements.

Building secure, scalable platforms requires a comprehensive approach that addresses architecture, security, operations, and ongoing evolution. Success depends on making informed technology choices, implementing robust development and deployment processes, and maintaining a focus on business outcomes throughout the platform lifecycle.

Our experience has shown that the most successful platforms are those that balance technical excellence with business pragmatism, providing the capabilities needed today while maintaining the flexibility to evolve and grow over time. This requires careful attention to architecture, technology selection, and operational practices that support both immediate requirements and long-term strategic objectives.

Platform development is ultimately about enabling business success through technology. The best platforms are those that become invisible to users while providing the reliability, performance, and capabilities that enable organizations to achieve their strategic objectives and serve their customers effectively.

Don't settle for platforms that limit your potential. At Bridges, we help organizations build secure, scalable platforms that serve as the foundation for digital transformation success. Our proven methodologies, technical expertise, and commitment to operational excellence ensure that your platform delivers the capabilities you need today while positioning you for future growth and innovation.

Whether you're modernizing legacy systems, building new digital capabilities, or planning your next-generation platform architecture, our team can help you navigate the complexities of modern platform development while avoiding the pitfalls that derail many initiatives.

Ready to build a platform that scales with your ambitions? Contact Bridges today to schedule a consultation and discover how our proven approach to platform development can help you achieve your digital transformation objectives while building the foundation for long-term success.

About Bridges: Bridges is a leading digital transformation company specializing in AI, automation, fleet management, finance, and logistics solutions. Based in Dubai, UAE, we help organizations across the Middle East and beyond build secure, scalable platforms through proven methodologies and expert technical guidance. Learn more at www.thebridges.io.



